The Best Models, May 2025

Introduction

I rarely mention new AI models because people hype every release and waste time on it, even when there’s minimal practical benefit over ChatGPT. However, a new contender just blew my mind.

As I was wrapping up an article on how to code with Claude Sonnet 3.7 in Cursor, OpenAI released ChatGPT-o3 and Google released Gemini 2.5 Pro.

Remember when I said not to ignore Google? Here’s a comment that summarized my argument well:

This makes sense. Google not only has access to unlimited talent and capital, but they also have the entire world’s data at their fingertips

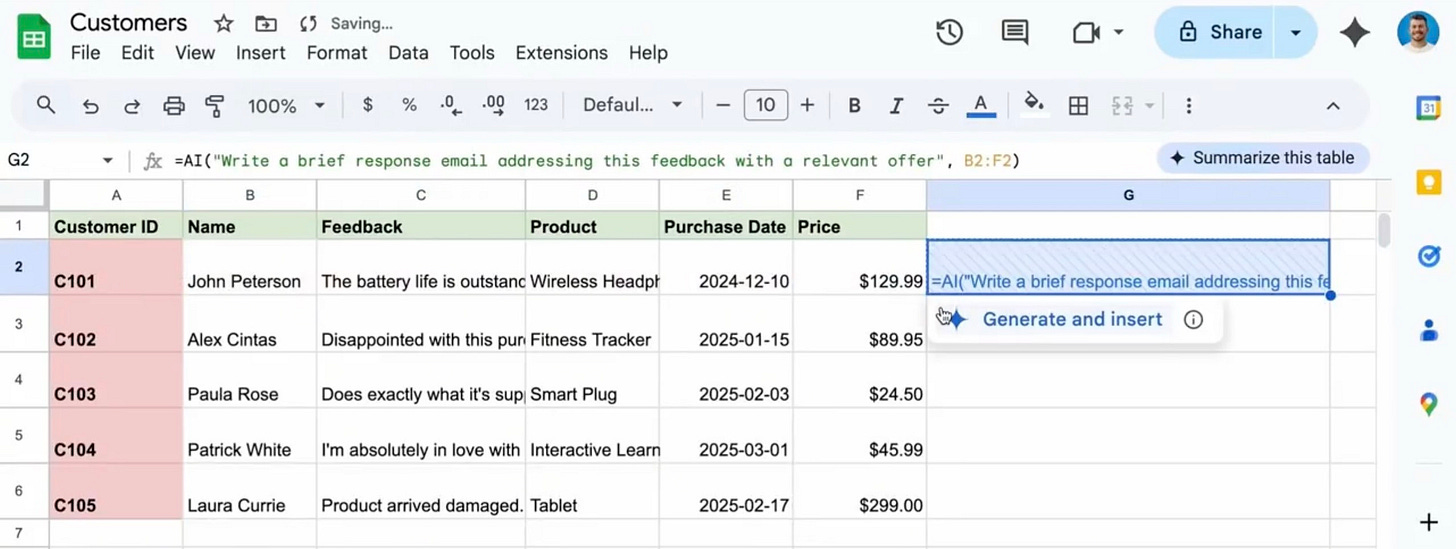

But more importantly, they also have products they can easily integrate it with.

People forget AI is not just about model performance. It’s also about how well you can deliver an experience to users.

Wherever there is friction, there is an opportunity to make money. That’s why you see so many people making six figures a month from chatbot wrapper apps, even though those apps just run ChatGPT under the hood.

Wrapper apps prove that product is more important than model performance. Not every user knows how to use AI or integrate it into their workflow. This is why ChatGPT has prompt suggestions and autocomplete.

Good products teach the user what is possible. Not only does Google have products, but it can also keep improving them because it owns all the data being sent and received.

We know Google is improving because its newest model ranks as the most cost-efficient in both reasoning ability and code generation.

Yes, ChatGPT-o3 does perform better, but a 15x cost difference per query adds up fast. So I prefer to use ChatGPT for