AI Solves Any Problem If You're Specific Enough

Prompting Strategies for Newer AI Models

Introduction

I don’t have problems. I don’t think most people have real problems (except for health issues and death)

We could spend time arguing about whether this is true. But I think it’s more important to ask: “is it useful to think like a fox?”

“I can solve anything” produces outcomes. “Life is out of my control” produces cope. Even if both are partially true, only one is useful

When you actually write out the details, 90% of “problems” turn into a checklist. And once it’s a checklist, you can hand it to someone else: To a person. To a system. To AI

Problem or Fog?

A problem has edges:

A) Where you are now

B) Where you want to be

C) The constraints you can’t ignore

If you can’t name these, then you don’t have a problem, you have fog. And a fog feels heavy because it has no edges, so there’s nothing to grab

It sounds like:

“I need to scale”

“Ads aren’t working”

“My onboarding sucks”

“I want to integrate AI”

“I need a better brand”

These are assumptions. Split assumptions from facts:

D30 retention is 8%

Activation rate is 22%

Most churn notes mention “too complex”

Time-to-value median is 2 days

Most business owners don’t fail because they’re lazy, unlucky, or untalented. They fail because they’re acting on underspecified problems. Then they pile tools, tactics, and “AI” on top of that ambiguity and wonder why nothing compounds

A sharp problem creates leverage. A vague problem creates motion without progress

Instead of assumptions, focus on facts first because:

Words are Fake

We pack a lot of details into one word. Instead of saying “round, sweet, red edible thing,” we say “apple”

Language uses shortcuts

Obviously we have to use these shortcuts to communicate, and it’s fine when the stakes are low

But shortcuts also delete details. And sometimes the deleted details are the whole issue

Example:

“I don’t want to start a business. It’s too risky.”

That sounds like a reason, but it’s just “word shortcuts” and labels

What does “business” mean here? A software product? A local service? Consulting? Selling a digital file? A one-person agency?

What does “risky” mean? Lose money? Waste time? Look stupid? Legal exposure? Social embarrassment? Family pressure?

If you don’t clarify your words, your brain fills in the blanks with fear. And AI will fill in the blanks with guesses. Then we call it “hallucination”

We get worried when things are vague, abstract, and compressed, not when they’re specific. Vague problems eat attention.

Sly Fox Tip: Vague language is also how people capture attention, like “I have a big announcement soon, something huge is coming…” or like the first sentence of this post

When it’s unclear, you can’t tell if you’re making progress. You can’t tell what “solved” looks like

So you loop: think → worry → research → talk → repeat

Uncle Faukes Tip: This is also what trauma looks like. When your brain feels like it hasn’t extracted the lessons from a negative event, it’ll run this loop, and the resulting rumination is linked to depression (source: Nolen-Hoeksema). If you have a past event you can’t get over, look into James Pennebaker’s research on processing it by constructing a detailed narrative, and have ChatGPT guide you through it

Avoid Monkey Mode with “Define the Problem”

I had a client who booked me to “integrate AI into his sales pipeline.”

He asked:

How do I connect a software product to Google Sheets?

I want to summarize hundreds of call transcript PDFs

Now I want to find patterns and label each PDF

Can I put it into my note system?

After a lot of talking, we found the real goal: he wanted to review sales calls to get better at sales

🙈

This pattern is common: people ask for help with a solution they already picked, not with the problem they actually have. This is a certified Monkey Question™

They think they can fumble their way into fixing the real problem by doing their solution. So, they keep asking about their solution instead of the actual problem

After wasting time discussing their solution, we eventually figure out the real problem

If you want to get better solutions, you need to first define the problem:

Clarify Terms: Precisely define the words you’re using. No labels. No “it’s complicated.” No “you know what I mean.”

Explain The Goal: State what you’re trying to achieve and why. Do not explain your attempted solution. Your approach might be wrong. We only care about the outcome.

Inputs, Outputs, Constraints: What data goes in, what comes out, what’s limited (time, budget, privacy, tools, risk)

This forces reality into the open. It prevents clever answers that solve the wrong thing. It prevents tool rabbit holes. It’ll stop productive procrastination and dopamine-ing on complexity

🙉: “I want a transcript-labeling system”

🦊: “I want the top 5 patterns that lose me deals, with quotes, so I can fix them”
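If it helps to see the three steps as a structure, here’s a rough Python sketch. `ProblemDefinition` and `missing_edges` are hypothetical names, not part of any library, and the example values (especially the definition of “pattern” and the constraints line) are placeholders I made up around the fox’s request above.

```python
from dataclasses import dataclass, field


@dataclass
class ProblemDefinition:
    """Hypothetical container for the three steps above."""
    terms: dict[str, str] = field(default_factory=dict)   # word -> precise meaning
    goal: str = ""                                        # the outcome and why, not the solution
    inputs: list[str] = field(default_factory=list)       # what data goes in
    outputs: list[str] = field(default_factory=list)      # what comes out
    constraints: list[str] = field(default_factory=list)  # time, budget, privacy, tools, risk

    def missing_edges(self) -> list[str]:
        """Return the names of the fields that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# The monkey version names a solution and nothing else.
monkey = ProblemDefinition(outputs=["a transcript-labeling system"])

# The fox version fills in every edge (values are illustrative placeholders).
fox = ProblemDefinition(
    terms={"pattern": "a recurring moment in a call that comes before a lost deal"},
    goal="Get better at sales by fixing the patterns that lose me deals",
    inputs=["sales call transcripts (PDF)"],
    outputs=["top 5 patterns that lose deals, each with quotes"],
    constraints=["placeholder: time, budget, and privacy limits go here"],
)

print(monkey.missing_edges())
print(fox.missing_edges())
```

Anything `missing_edges` returns is fog. Fill those in before you hand the problem to a person or to AI.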

This is Where AI Hallucinations Come From

A lot of “AI hallucination” is really “you didn’t specify what you meant”

Example: “Is 9.11 bigger than 9.9?”

“Bigger” could mean:

larger numeric value (9.11 vs 9.9)

more digits (three vs two)

a later software version (9.11 could be after 9.9 depending on versioning rules)

If you don’t define the yardstick, you’re asking the model to guess what game you’re playing

A simple rule: if your question has a hidden measurement, make it explicit

“Bigger numerically”

“Later as a version number”

“Greater number of digits”
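Here’s a minimal Python sketch of the same question under each yardstick. The function names are made up for illustration, and the version comparison assumes plain dot-separated integers, which is only one of many versioning rules.

```python
def bigger_numerically(a: str, b: str) -> bool:
    """Compare as decimal numbers."""
    return float(a) > float(b)


def later_version(a: str, b: str) -> bool:
    """Compare as dot-separated version parts, e.g. (9, 11) vs (9, 9).

    Assumes every part is a plain integer (no "rc1", no "-beta").
    """
    def parts(version):
        return tuple(int(p) for p in version.split("."))
    return parts(a) > parts(b)


def more_digits(a: str, b: str) -> bool:
    """Compare by how many digit characters each string contains."""
    def count(text):
        return sum(ch.isdigit() for ch in text)
    return count(a) > count(b)


if __name__ == "__main__":
    # Same two inputs, three different yardsticks.
    for yardstick in (bigger_numerically, later_version, more_digits):
        print(yardstick.__name__, yardstick("9.11", "9.9"))
```

Same inputs, three different answers: numerically 9.11 is smaller, but as a version or by digit count it comes out “bigger.” The model isn’t hallucinating when it picks one. You just never told it which function to run.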

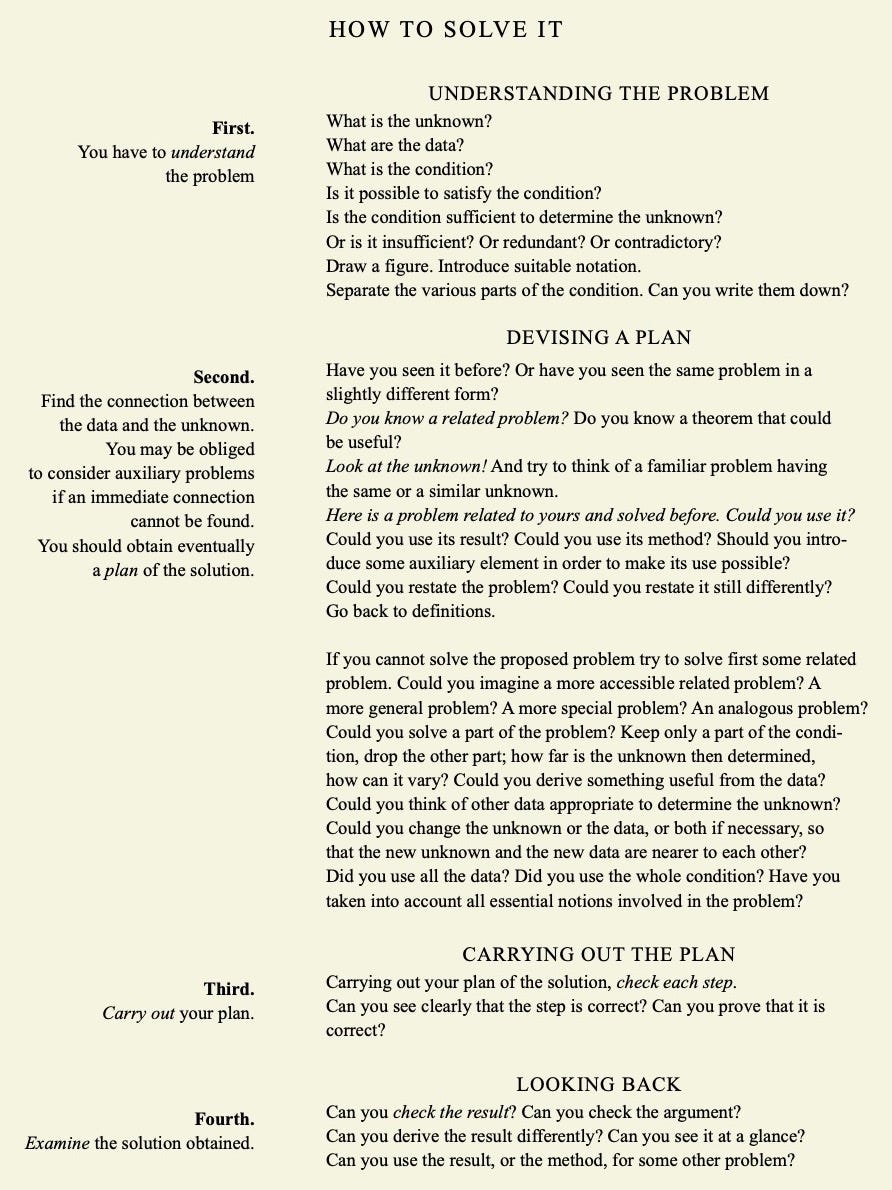

We define the problem first because it makes the correct solution clear to humans and AI. Here’s a framework made by a Stanford professor for solving technical problems.

Notice the first step:

Steps 2, 3, 4 (plan, execute, test) can be done by AI. But not step 1. People struggle with AI because they want it to define the problem for them. Or they throw random darts at a problem, hoping one will stick

Information vs. Problem Definition

Every time I get on a consulting call, people show up and dump a word salad. They think talking will automatically move them forward

My job is to define the problem. Get the facts, find the edges

Here’s a quick test: If the new information doesn’t change what you do next, you’re stalling. If it changes the next step, you’re learning

Example story:

“My wife is such a… we always fight because I keep forgetting the raw milk… and even if I go get it, she’s still upset…”

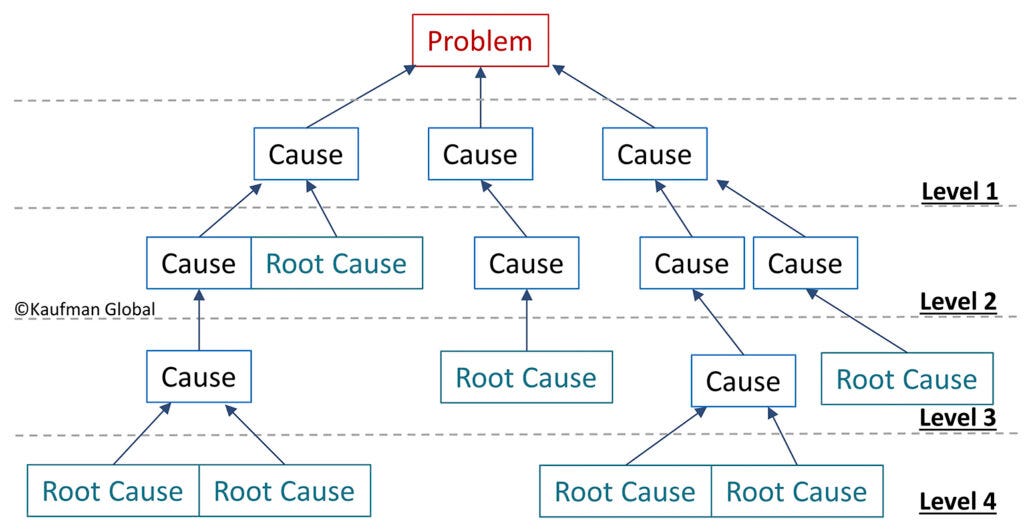

Define the problem by drilling down:

“She’s upset.” Why?

“Because I keep forgetting the milk.” Why?

“Because I’m forgetful.” Why?

“Because I’m overworked.” Why?

“Because layoffs happened and my workload doubled.”

Now the problem isn’t milk. It’s overload, weak systems, and no AI automation. That’s solvable.

Word Shortcuts Hide Details

The most common monkey behaviour is getting stuck with these word shortcuts and labels that hide details. Hiding details is great when using things, but terrible when fixing things

Here’s an easy example: you don’t think about a car engine when you use a steering wheel, right? The details of the metal and wires are hidden from you, so you can focus on driving

But if your car dies, staring at the steering wheel won’t fix it

Same with life: marriage, confidence, career, discipline, success

Bad decisions come from relying on labels. People who believe marriage is “the next step for people who have been together for a while” are moneymakers for divorce lawyers

They haven’t broken down the concept of “marriage,” and instead rely on a vague understanding from culture, movies, and friends. If you treat the label like an explanation, you follow scripts and emotions

Good decisions come from breaking labels into simple actions

Example:

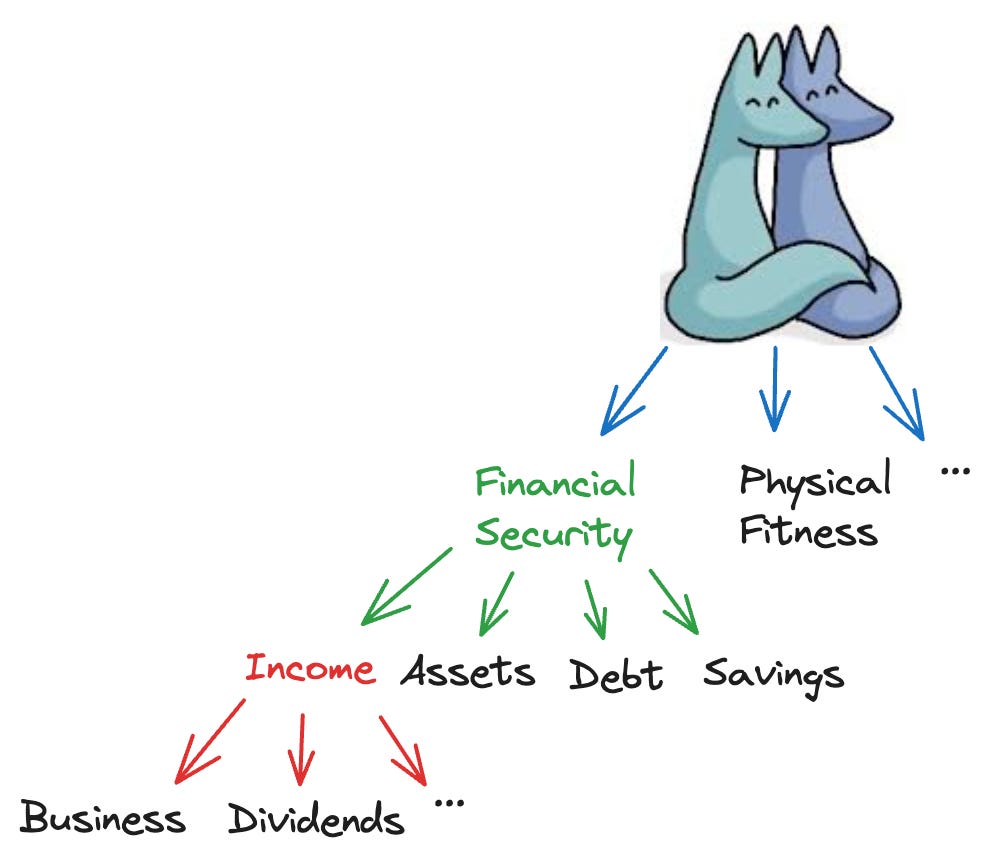

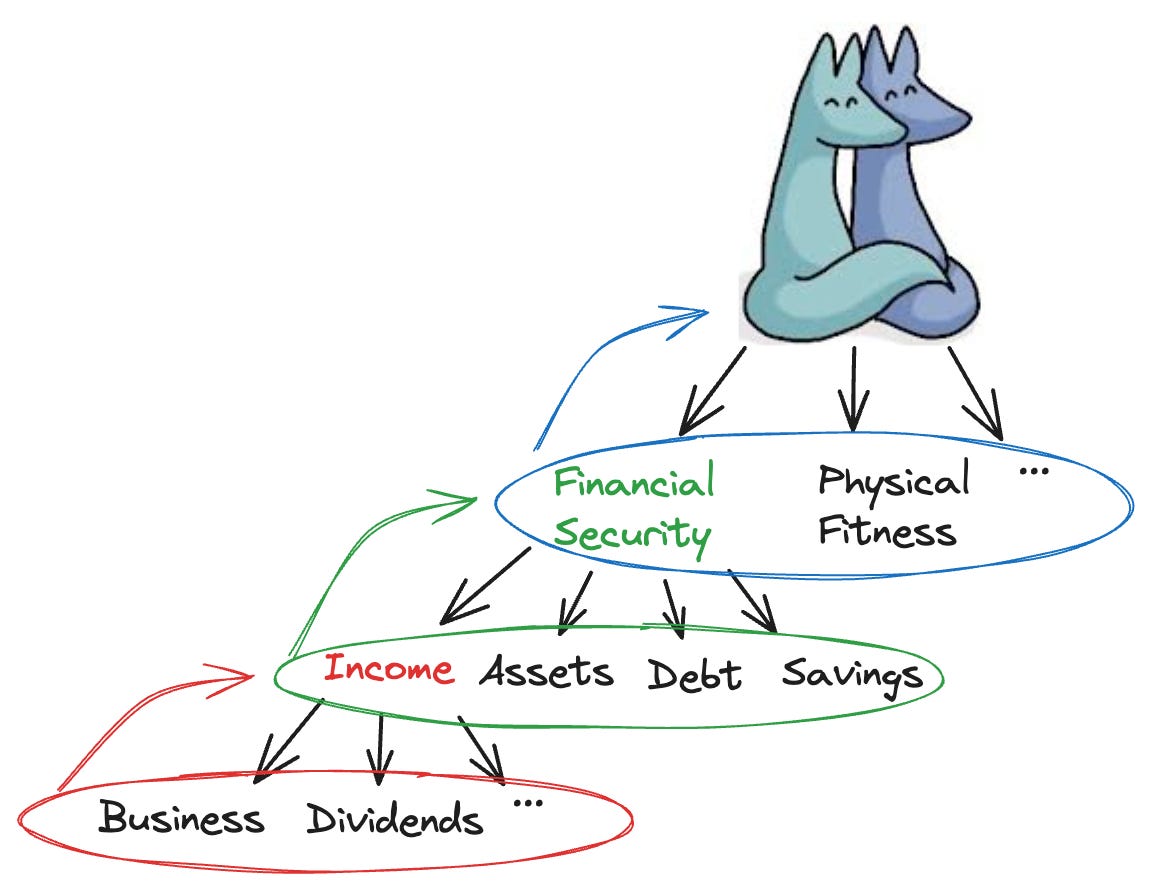

A “good marriage” is a combination of shared values, physical fitness, financial security, and [fill in the blank]

Then break each part into actions:

This is different from “overthinking” because it’s attached to an end goal. When information isn’t attached to a project, it’s endless. You’ll become a scroller, bookmarker, saver

A project has edges (goals, inputs, outputs, constraints), which means you can define the problem, so you can move on to the next steps: plan, execute, test

Sly Fox Tip: Notice how people make bad decisions because they don’t agree on definitions. “Don’t overthink” can mean multiple things:

🙉: Don’t define the problem

🦊: Don’t endlessly seek information outside of a project

Uncle Faukes Tip: This is also why it’s not worth your precious time to argue. People use the same words with completely different meanings, with wildly different objectives, with unclear inputs, outputs, constraints. Arguments are often used to feel better emotionally, instead of uncovering truth

Plus, people don’t even use dictionary definitions. They use whatever trauma they associate with the word. You can see how people argue endlessly about “high-skilled workers” versus “low-skilled labour” versus “refugees” by using the same word “immigrants.” Three different conversations, three different problems

Smile, nod, agree. As Satoshi once said, “If you don’t believe me or don’t get it, I don’t have time to try to convince you, sorry.”

Especially now: there’s no reason you should need to convince someone of anything anymore when ChatGPT can come up with arguments for any side and pull up the latest research

Or, if you think ChatGPT is too brainwashed, use a Chinese model like DeepSeek and compare the arguments. Verification is easier than solving, so you can double-check whether the arguments make sense and ask clarifying questions

Advanced AI Models Require Well-Defined Problems

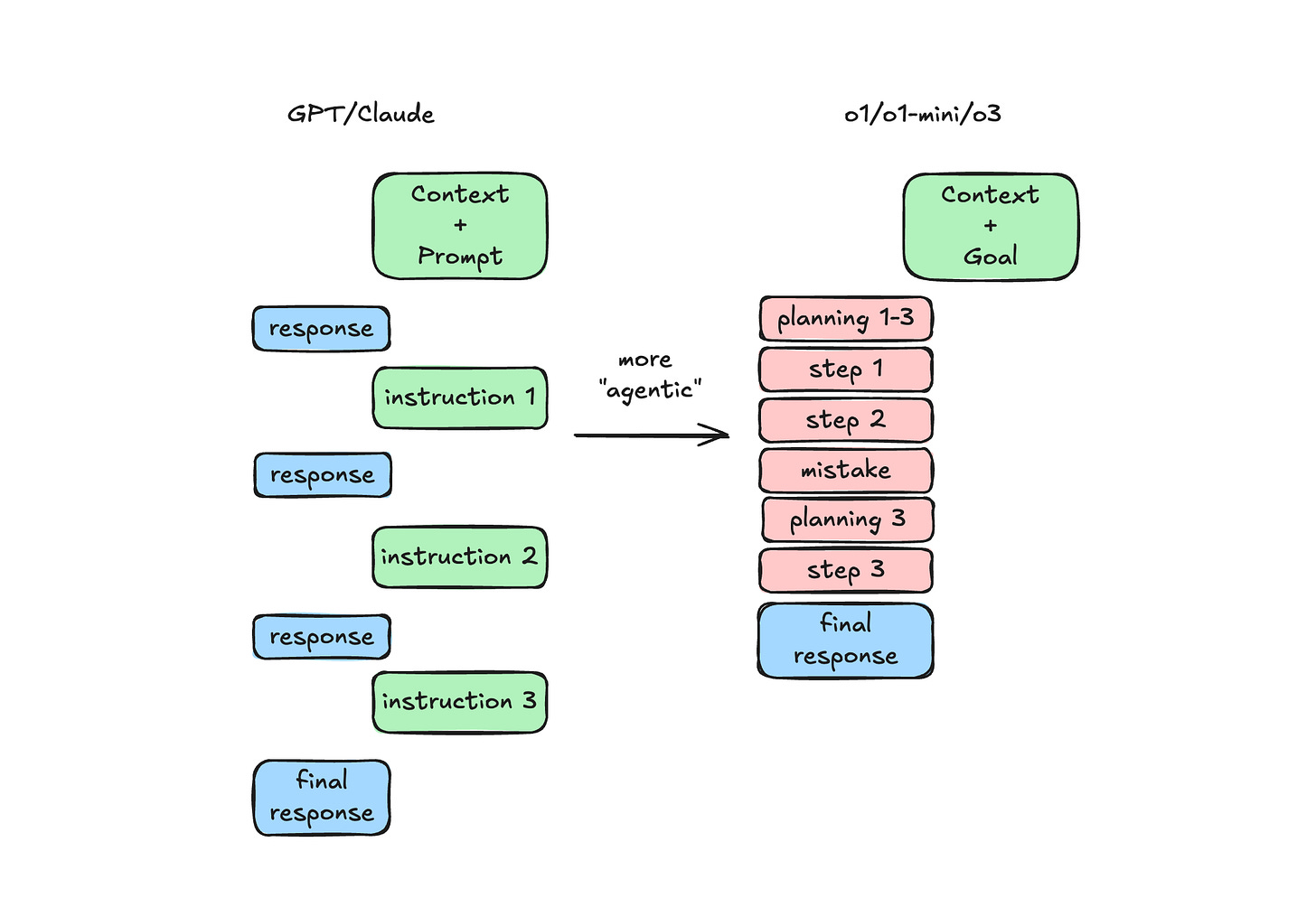

Defining problems is important not just for life, but also for newer AI models like ChatGPT Thinking Mode. People dislike Thinking Mode because they expect to dump information at it, then say “please fix”

I think people have developed this habit because older chat models like ChatGPT Instant Mode tolerate lazy prompts. Instant Mode will either ask for more context, or you’ll notice something missing from the response

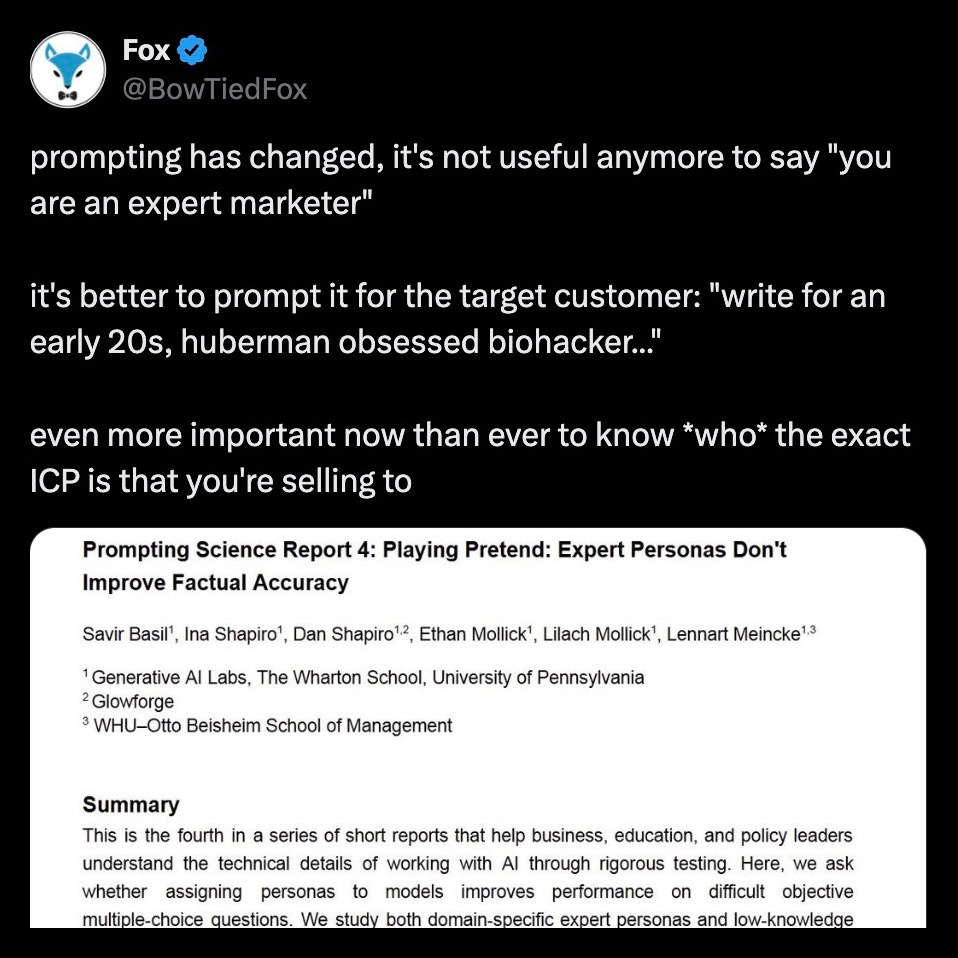

Instant Mode can work with “You are an expert marriage counselor…” because you teach it how to frame the conversation, instead of telling it what you’re trying to solve. You go back-and-forth until you get something useful. The conversation progresses by correcting and expanding on what you want

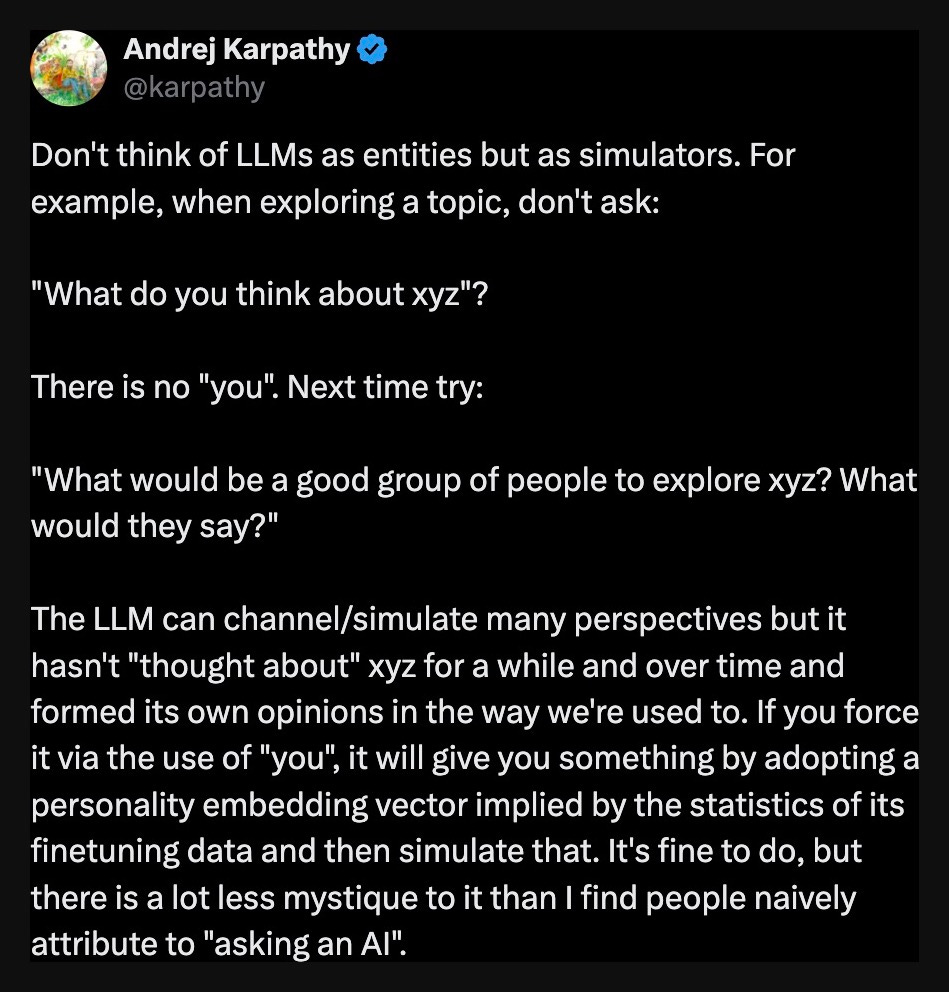

But AI does not have a concept of “you.” So it’s better to ask it, “Who would be the best group of people to explore this topic?” and then use that

Take it from one of the founders of ChatGPT:

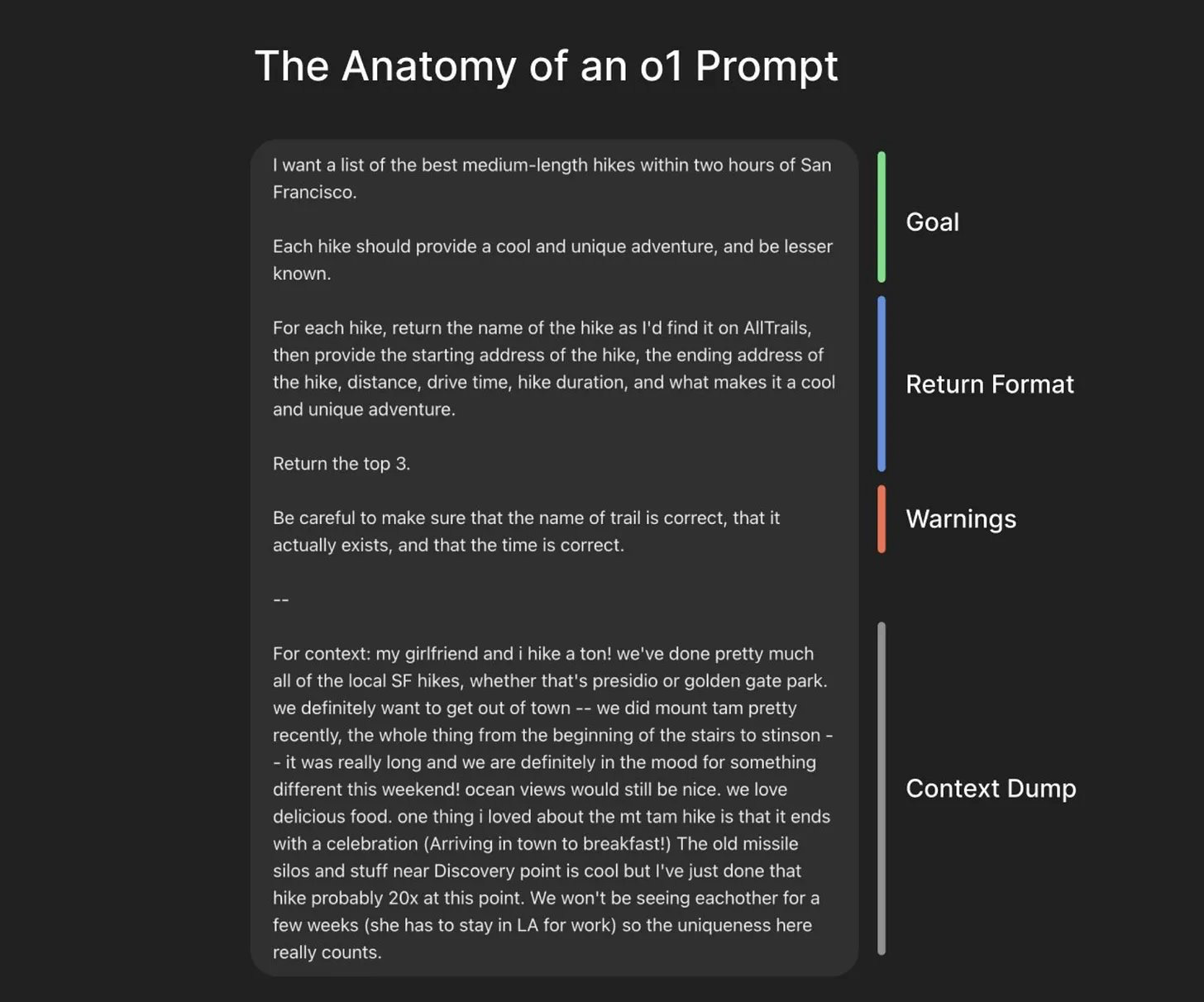

Prompting correctly is especially important for Thinking Mode, which requires you to define the problem to unlock its full potential:

This means Thinking Mode is more “agentic,” closer to an advisor than a chatbot. It generates reports, not chat messages. But the benefit is that you’ll get fewer hallucinations, better medical diagnoses, more intelligent explanations for concepts, more viable business ideas, and up to 1500 lines of code in one shot

Treat it like an offshore employee who will implode if you give unclear instructions

Anti-Monkey Template

If you want to use AI to define the problem and create an execution plan, here’s a template you can copy-paste: